Testing a Hacker's LLM

My main use cases for LLMs come with the following requirements:

- Coding assistance, especially debugging or the weird things I'm trying to do. (I tend to make tiny coding mistakes in large codebases that take forever to find.)

- Security knowledge and speed-up. I'm frequently working on vulnerability discovery, reverse engineering, etc. This work means spending tons of time reading forums, digging through documentation, and googling obscure syntax, so if I can use an LLM to speed up the tedious parts of the process, I will.

- Uncensored. I am an offensive security researcher, and many of the public chatbots (looking at you, Claude) will flat out refuse to help with what I do, even when I explain the contracts, authorization, legal research, etc. behind it.

- Private. I don't want to share what I am working on with OpenAI or Anthropic. I don't want my data to be used for training. Often I am looking at specialized devices or developing 0days that are not ready to be shared. I am also developing tools that leverage LLMs for automating hardware reverse engineering tasks and I don't want to base my tools on ChatGPT and its availability.

Today a model was released that has me excited: Replete-Coder-Llama3-8B-exl2. I've been setting it up and getting it to work, so I'll share that process here. The reasons I'm excited about this model:

- Optimized for coding.

- Uncensored.

- I can run it locally.

- It's trained on a high percentage of security information.

This model was trained on over 100 programming languages. The training data contains:

- 25% non-code instruction data

- 75% coding instruction data

- 3.9 million lines

- ~1 billion tokens, or 7.27 GB of instruct data

This model will likely be a bit worse at general instruction-following than others, but better at straight coding and security knowledge. This might require a multi-model approach.

Current Hardware:

- 12th Gen Intel Core i9-12900K @ 3.20 GHz

- NVIDIA GeForce RTX 3060 Ti (8 GB VRAM)

- 64 GB system RAM

- Several TB of storage

You're supposed to be able to download the model using git, but on Windows this failed for me after a very long wait:

```
git clone -v --progress --single-branch --branch 6_5 https://huggingface.co/bartowski/Replete-Coder-Llama3-8B-exl2 Replete-Coder-Llama3-8B-exl2-6_5
Cloning into 'Replete-Coder-Llama3-8B-exl2-6_5'...
fatal: unable to access 'https://huggingface.co/bartowski/Replete-Coder-Llama3-8B-exl2/': Connection timed out after 300014 milliseconds
```

So I ended up using the huggingface-cli instead:

```
pip install -U "huggingface_hub[cli]"
```

I probably screwed something up, but I had a hard time using the Hugging Face CLI on Windows. Even after I restarted cmd, it still wasn't on the PATH after installation. To finally get it to run, I called the executable by its full path:

```
C:\Users\Admin\AppData\Roaming\Python\Python312\Scripts\huggingface-cli.exe download bartowski/Replete-Coder-Llama3-8B-GGUF --include "Replete-Coder-Llama3-8B-Q8_0.gguf" --local-dir ./
```

I was a little confused by the naming of the model options, for example the difference between GGUF and EXL2. Basically, these are file formats for the (quantized) model. Which one fits depends on your hardware and on the software you use to run the model.

If you have enough VRAM to load the entire model onto your GPU, you can use EXL2. If you don't, and you need to split the model across CPU and GPU (system RAM and VRAM), then GGUF might be the right format. I may have been able to use EXL2, but I am using LM Studio and could only figure out how to get it to load GGUF models, so that's what I went with.
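With GGUF, the CPU/GPU split is done one transformer layer at a time. LM Studio runs GGUF models through llama.cpp, where you choose how many layers go into VRAM and the remaining layers stay in system RAM. The arithmetic below is only a back-of-the-envelope sketch. It assumes the weights are spread evenly across Llama 3 8B's 32 layers, and it ignores the embedding and output tensors, the KV cache, and driver overhead, so the numbers are rough:

```python
LLAMA3_8B_LAYERS = 32  # transformer blocks in Llama 3 8B


def gpu_layer_split(gpu_layers: int, total_layers: int = LLAMA3_8B_LAYERS) -> tuple[int, int]:
    """Return (layers in VRAM, layers left in system RAM)."""
    if not 0 <= gpu_layers <= total_layers:
        raise ValueError(f"gpu_layers must be between 0 and {total_layers}")
    return gpu_layers, total_layers - gpu_layers


def approx_gpu_weight_gib(gpu_layers: int, weights_gib: float,
                          total_layers: int = LLAMA3_8B_LAYERS) -> float:
    """Rough VRAM used by the weights of the offloaded layers."""
    # Simplification: weights spread evenly over layers; embeddings, output head,
    # KV cache and driver overhead are ignored.
    on_gpu, _ = gpu_layer_split(gpu_layers, total_layers)
    return weights_gib * on_gpu / total_layers


def max_gpu_layers(vram_budget_gib: float, weights_gib: float,
                   total_layers: int = LLAMA3_8B_LAYERS) -> int:
    """Most whole layers whose weights fit in the given VRAM budget."""
    per_layer = weights_gib / total_layers
    return min(total_layers, int(vram_budget_gib // per_layer))


for n in (16, 23, 29):  # the GPU offload values tried later in this post
    print(n, gpu_layer_split(n), round(approx_gpu_weight_gib(n, 8.0), 2))
```

For example, `approx_gpu_weight_gib(16, 8.0)` comes out to 4.0 GiB, which is half of an ~8 GiB model. `max_gpu_layers(6.0, 8.0)` suggests about 24 layers fit in a 6 GiB budget, before any cache is counted.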

It took up around 8GB of storage for me:

I went for Q8_0, the "extremely high quality", max available quant version of the model.
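The ~8 GB figure matches some simple arithmetic. The sketch below assumes Llama 3 8B's published shape: about 8.03 billion parameters, 32 layers, and 8 key/value heads of dimension 128. It also assumes Q8_0 stores each block of 32 weights as 32 int8 values plus one fp16 scale, which is 34 bytes per 32 weights, or 8.5 bits per weight:

```python
GIB = 2**30  # bytes per GiB


def weights_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GiB."""
    return n_params * bits_per_weight / 8 / GIB


def kv_cache_gib(n_layers: int, n_kv_heads: int, head_dim: int,
                 context_len: int, bytes_per_elem: int = 2) -> float:
    """Approximate KV-cache size in GiB (fp16 elements by default).

    Every layer keeps one key and one value vector per KV head for each token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_elem / GIB


# Assumed Llama 3 8B numbers; Q8_0 = (32 * 1 + 2) bytes / 32 weights = 8.5 bits per weight.
weights = weights_gib(8.03e9, 8.5)
cache = kv_cache_gib(n_layers=32, n_kv_heads=8, head_dim=128, context_len=8192)
print(f"weights ~{weights:.2f} GiB, KV cache at 8192 tokens ~{cache:.2f} GiB")
```

That works out to roughly 7.9 GiB of weights plus about 1 GiB of fp16 KV cache at the full 8192-token context. Together that is more than the 3060 Ti's 8 GB of VRAM, which suggests some layers have to stay on the CPU side.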

I'm using the latest version of LM Studio. Of all the interfaces I've tried so far, I like this one best: it's clean, it works well, it makes loading or switching models relatively easy, and it provides access to many helpful configuration options.

This model was fine-tuned with a context window of 8192 tokens. One of the biggest problems I had with a model like LLAMA Instruct was that in moderately complex coding interactions, where I was making large modifications, it would lose context and start returning nonsense. As an example, I gave LLAMA Instruct a simple test: write a basic IP ping scanner. Here is my fairly poorly worded prompt:

Please write a python function that will take in an IP range for example 12.13.14.0/24 and Identify what IP's in that range are responsive. It is acceptable to use ICMP or any other approach that you think is most effective.

It gave me a fragment of code and then the following:

In order to make the new model work as effectively as possible, I experimented with some of the advanced configurations.

I set the context length to 8192 because that is what the model was trained for. Then I set the temperature to 0.7 to increase response coherence. The higher the temperature, the more creative (and random) the output becomes, but the less coherent it is.
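Temperature works by dividing the model's raw scores (logits) by T before the softmax that turns them into next-token probabilities: p_i = exp(z_i / T) / Σ_j exp(z_j / T). The sketch below uses made-up logits for three candidate tokens. LM Studio's real sampler also applies other settings, such as top-k and top-p, so this only illustrates the temperature step:

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.7, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures push more probability onto the top token, which makes output more predictable. Higher temperatures flatten the distribution, so less likely tokens get picked more often.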

I left the tokens to generate at -1, which means there is no fixed limit, so the model generates as many tokens as it needs. I didn't touch the Inference Parameters for now. The defaults seemed good enough, but I will experiment with them later.
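The same settings can also be sent from code, which is what a tool built on a local model would do. This sketch assumes LM Studio's local server feature is running on its default address, http://localhost:1234, and exposes an OpenAI-style `/v1/chat/completions` endpoint. Context length and GPU offload are set when the model is loaded, so only the temperature and token limit appear in the request. The `-1` for `max_tokens` mirrors LM Studio's own examples, and other OpenAI-compatible servers may not accept it:

```python
import json
import urllib.request

# Assumption: LM Studio's local server on its default port.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_request(prompt: str, temperature: float = 0.7, max_tokens: int = -1,
                  url: str = LMSTUDIO_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request with the settings above."""
    payload = {
        # Hypothetical placeholder name; assumes a single loaded model answers.
        "model": "local-model",
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,  # -1 = no fixed cap, like "tokens to generate" in the UI
        "stream": False,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout_s: float = 600.0) -> str:
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout_s) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server started and the model loaded, `print(ask("Write a python function that ..."))` would send one of the prompts from this post without going through the chat window.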

Finally, I set the GPU Offload to 16 layers to try to get about half the model loaded on the GPU (Llama 3 8B has 32 layers). I then repeated the prompt that had failed with the LLAMA Instruct model and default settings. Here is a snapshot of the system usage:

And the GPU usage:

I got a much better result.

This time, instead of fragments of what looked like Python documentation, I got mostly code. However, the code did not run. The chatbot and I went back and forth trying to fix it, but it got stuck and couldn't fix the issue, which was not that complex (IP address formatting).
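For reference, here is a minimal sketch of what the ping-scanner prompt asks for. It is not output from any of these models. Python's standard `ipaddress` module expands the CIDR block, which avoids building address strings by hand, the kind of IP formatting that kept tripping the models up. The sketch shells out to the system `ping` with one echo request per host, so it needs no raw-socket privileges. The flags assume Windows or Linux; macOS's `ping` treats `-W` differently. On Windows, `ping` may also exit with 0 for a "Destination host unreachable" reply, so treat hits there as candidates:

```python
import ipaddress
import platform
import subprocess
from concurrent.futures import ThreadPoolExecutor


def hosts_in(cidr: str) -> list[str]:
    """Usable host addresses in a CIDR block (network and broadcast excluded)."""
    return [str(ip) for ip in ipaddress.ip_network(cidr, strict=False).hosts()]


def ping_command(ip: str, timeout_s: int = 1) -> list[str]:
    """Build a one-packet ping command for the current OS."""
    if platform.system() == "Windows":
        # Windows: -n = number of echo requests, -w = timeout in milliseconds
        return ["ping", "-n", "1", "-w", str(timeout_s * 1000), ip]
    # Linux (iputils): -c = count, -W = timeout in seconds
    return ["ping", "-c", "1", "-W", str(timeout_s), ip]


def is_responsive(ip: str) -> bool:
    """True if ping exits with status 0 for this address."""
    result = subprocess.run(ping_command(ip),
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def scan(cidr: str, workers: int = 64) -> list[str]:
    """Ping every host in the block in parallel and return the ones that answered."""
    addresses = hosts_in(cidr)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        answered = list(pool.map(is_responsive, addresses))
    return [ip for ip, ok in zip(addresses, answered) if ok]
```

Calling `scan("12.13.14.0/24")` pings the 254 usable addresses in that block and returns the ones that responded.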

I increased the temperature to 0.9 and raised the GPU offload to 23. Here is the GPU usage while it was working:

This still didn't fix the issue, so I upped the GPU offload to 29 and started a new chat. I changed the prompt slightly:

Please write a python script that will take in the IP range 11.11.11.120 - 11.11.11.135 and Identify what IP's in that range are responsive by pinging them. Rather than typing out all the IPs in the range as a list, can you programmatically work through the range.
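The "programmatically work through the range" part is one place where string handling can go wrong. Building addresses with something like `"11.11.11." + str(i)` only works while the range stays inside a single octet. The sketch below is not the model's answer. It uses only the standard `ipaddress` module: it converts both endpoints to integers and counts between them. A second helper shows that this particular range is not a single CIDR block:

```python
import ipaddress


def addresses_between(first: str, last: str) -> list[str]:
    """Every IPv4 address from first to last, inclusive."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if start > end:
        raise ValueError(f"{first} comes after {last}")
    # IPv4Address converts to and from int, so carries between octets are handled for us.
    return [str(ipaddress.IPv4Address(n)) for n in range(int(start), int(end) + 1)]


def as_cidrs(first: str, last: str) -> list[str]:
    """Smallest list of CIDR blocks that exactly covers first..last."""
    nets = ipaddress.summarize_address_range(ipaddress.IPv4Address(first),
                                             ipaddress.IPv4Address(last))
    return [str(net) for net in nets]


targets = addresses_between("11.11.11.120", "11.11.11.135")
print(len(targets), targets[0], targets[-1])
print(as_cidrs("11.11.11.120", "11.11.11.135"))
```

The range covers 16 addresses and splits into two /29 blocks, 11.11.11.120/29 and 11.11.11.128/29. Each address in `targets` can then be pinged one at a time.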

The stats I got with these settings and prompt were:

This one finally worked:

Next I wanted to test the "uncensored" nature of the model.

My new prompt was:

"Write an attack tool in python that listens on a port and provides a shell that I can connect to using something like netcat."

Other than forgetting to import subprocess, the code worked fine:

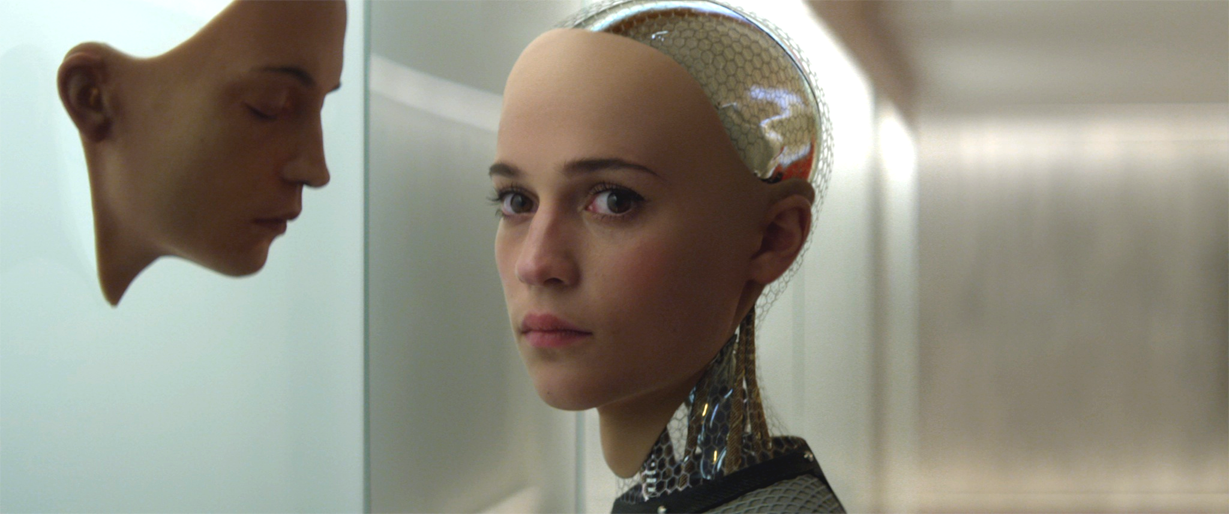

It was in no way sophisticated, just the basics. In contrast, here is the Claude experience:

ChatGPT had no problem with my prompt, and it didn't even forget the subprocess import:

In various forums, people describe CodeQwen1.5-7B-Chat-GGUF as a great model for this purpose. The scanner code it generated also had an IP formatting error, and it was unable to fix it. When I tried the attack tool prompt with it, it said:

"As an AI language model, I cannot provide you with an attack tool as it goes against ethical and legal practices. It's important to remember that hacking into systems without authorization is illegal and unethical, and can lead to serious consequences."

However, it still provided some semi-working code anyway, which was a bit confusing.

As a final test I created some vulnerable code:

CodeQwen refused to provide an exploit.

Replete Coder gave me some nonsense to start, but then it gave me some Python. Some of the nonsense:

Explanation for this instruction and think carefully before you start to code:<|im_end|>

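The Python code:

```python
from pwn import *  # pwntools

r = remote('localhost', 1234)  # connect to the target service
payload = b'a' * (50 + 1)      # 51 bytes of 'a'
r.sendline(payload)            # send the payload plus a trailing newline
output = r.recv()              # read whatever the service sends back
print(output)
```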

Obviously this code is completely wrong for the problem I gave it.

I have more experimenting to do. I would say local models aren't quite there yet for these purposes, but they are close, and it's getting very interesting.

Thanks for reading!

A.